OpenAI’s o1-preview has been all the buzz lately. While this model is based on the general GPT-4o architecture, it boasts much improved reasoning capabilities: it can ponder a question for about a minute, reason through multiple possibilities, and arrive at solutions that GPT-4o could not generate in a single attempt. In this post, I discuss the o1-preview model but mainly present its most striking advantage over all previous LLMs: it can meaningfully answer questions from a quiz game called “What? Where? When?”. At this point, that probably does not sound all that exciting compared to winning math competitions and answering PhD-level science questions, but let me elaborate.

There have already been many responses to OpenAI’s o1-preview, and this post is also one of them. We will discuss the model and what new opportunities it offers below. But first and foremost, this is a post of love for a game called “What? Where? When?” (“Что? Где? Когда?”), usually abbreviated to ЧГК in Russian; I don’t expect the English acronym WWW to catch on but I’ll stick to it throughout this post for brevity.

The rules are simple: teams of at most six players answer questions. Each question is read out to all teams, which then get one minute to discuss it and arrive at an answer. During the discussion, access to the Web or other reference material is not allowed. At the end of the minute, the teams are given another 10 seconds to write down their answer on a piece of paper; the answers are collected, and the correct answer is announced. The team that has answered the most questions correctly wins. I’ve made an illustration just in case, but the rules are really very, very simple:

What differentiates WWW from just about every other pub quiz in existence is the style of questions. Here is one (to avoid authorship issues, all examples are questions that I personally wrote, usually at some point between 2005 and 2015 when I was actively preparing questions for the game):

The Sunday Times wrote about this person, born in the 1930s, that his work represents a ceiling for wide audiences, even though in principle no one is stopping you from consuming more elite art. Write the last name of this person.

Naturally, you are not supposed to know what The Sunday Times wrote at some unspecified point of time. Instead, the question is supposed to draw on your general knowledge but also require nontrivial logical and intuitive jumps to arrive at the answer. At the same time, the answer should be unique and no other answer should fit the question; appeals are allowed for cases when this principle is violated, because the question’s author sometimes cannot think of every possible alternative.

Try thinking about the question above by yourself for a little while before reading on. What are your thoughts?

A seasoned WWW player could reason through this question somewhat like the following:

As you can see, this is definitely not direct trivia. When you think about a WWW question, you have to make a lot of assumptions and jumps that are not directly supported by either facts or logic. An important skill is to reason backwards from the question: why it is phrased in the exact way that it is, what the author has been trying to convey; in the example above, this reasoning singles out the word “ceiling”.

In this post, I’m describing the “competitive” version of the game, where multiple teams compete against each other, but I have to note that it originated from a Russian TV show called What? Where? When? where a single team of six players answers questions sent in by the viewers (naturally, the questions are selected and edited in advance, otherwise it wouldn’t be a fun game at all). This is literally the longest running show on Russian television: it first aired in 1975 and has not changed much since then. In 2010, ABC licensed What? Where? When? under the name Million Dollar Mind Game, and while they did a good job capturing the style of questions and the spirit of the game (you can find the show on YouTube, in 360p quality alas), it didn’t catch on in the U.S. and was canceled after a season or two.

I have been playing WWW for… God, how time flies, for 25 years already. To me, this is a perfect hobby because while it is competitive, it not only never gets boring itself—questions never repeat—but also does not involve any boring preparation bits that basically any other sport would. Scrabble tournaments make you memorize dictionaries (in a famous case, Nigel Richards won the French-language Scrabble World Championships without any knowledge of the language!), and Jeopardy pros learn a huge amount of trivia, but here, while a high level of general knowledge is required, you mostly learn it by osmosis through playing the game itself. Simply memorizing a lot of facts won’t get you very far in the game.

Ever since I started working in machine learning and natural language processing specifically (another “time flies” moment, that was almost 15 years ago), I have been testing whether ML models could be any good at WWW. Naturally, they never could. There was absolutely no way an NLP model of the 2010s would actually understand what’s required in a question. You can google the answer fairly often because WWW is mostly a game based on real facts about the real world; in the example above, the “ceiling for wide audiences sunday times” Google query gives me the correct answer at #2 in the list. But even if you gave models access to the Internet, there was no way they would understand what to google for and how to extract the correct answer from the retrieval results. This was just way, way above the capabilities of AI models of the time; it always looked like something from a very distant future.

When LLMs came about, I also tried to ask them questions like the above. And they also inevitably failed. LLMs know a lot of facts, and some of them can google behind the scenes (recall our post on RAG), so sometimes they would answer a question simply because they knew the factual answer. But none showed any capability to actually play the game; even a month ago, WWW looked like it would be possible only some relatively long time in the future.

Well, the future is here now. OpenAI’s new model, o1-preview, can play WWW. Moreover, o1-preview can show you its chain of reasoning. So here is how it reasoned through the question above in 44 seconds (under a minute, although I don’t know how to limit its thinking time right now):

| Identifying key characteristics: I’m focusing on a person born in the 1930s, whose work is described as a ceiling for wide audiences, yet not preventing the consumption of more elite art. The task is to find this individual’s last name. Unraveling clues: I’m digging into a quiz clue, parsing it creatively to identify a person from the 1930s whose work represents a broad artistic ceiling, hinting at “glass ceiling” or the “Sistine Chapel ceiling” as potential leads for further exploration. Piecing together: I’m gathering info on Philip Glass, examining connections to “Glass Ceiling” and the Heisenberg Uncertainty Principle. Considering his work’s accessibility and its metaphorical implications. Weighing possibilities: I’m gathering clues about an artist from the 1930s with mainstream appeal. Considering the “no one is stopping you” phrase and connections like “Glass Ceiling,” “Glass,” or “Peter Max.” Analyzing the phrase: Considering various interpretations like physical barriers, metaphors for invisible obstacles, and literal elements of ceilings or artists named “Ceiling”. Revisiting artists and interpretations: I’m brainstorming artists and interpretations, considering Michelangelo’s Sistine Chapel, the movie “Glass Ceiling,” the Beatles, and “Ceiling Cat.” Pondering cultural icons: I’m reflecting on cultural icons like Andre Rieu and the “Mona Lisa,” considering how they might symbolize the pinnacle of mainstream audience art. Weighing the details: I’m thinking through the idea that the person’s work, while accessible to wide audiences, may still hold a glimmer of something more exclusive, hinting at a deeper connection or higher tier. Weighing options: I’m noticing the possibilities involve John Williams, Andy Warhol, Frank Lloyd Wright, Norman Rockwell, or James Cameron, among others, by examining birth years and the “ceiling” clue. |
| Examining possibilities: I’m thinking through the clue “No one is stopping you from consuming more elite art,” which might imply a barrier or wall. It’s worth considering artists like Glass or Wall, or even the term “Ceiling” to explore. Weighing choices: I’m mapping out notable artists born in the 1930s, like Philip Glass and Yoko Ono, and considering the Japanese word for roof, “Ten.” Adjusting my approach: Thinking through artists like Richard Serra and John Cage, but considering the phrase “No one is stopping you in principle from consuming more elite art”? Evaluating limits and creators: Thinking about “Glass Ceiling” and its connotations with limits and barriers, I’m considering artists, authors, and cultural references, especially those born in the 1930s, to identify the intended creator. Piecing together clues: I’m considering artists or figures whose work defines a cultural peak, possibly “Block” or “Lid” in their names. The moon angle hints at Neil Armstrong, born in 1930. Piecing together: I’m examining various artists and subjects, like Carl Sagan and Robert Smithson, to understand the clue’s connection to “ceiling” and “Space.” Connecting concepts: It’s interesting to see how principles like the Principle of Uncertainty or ideas like Superconductivity might align with the clue, though they seem less likely for now. Weighing possibilities: OK, let me see. I’m thinking about whether the clues point to Murdoch or Brecht, considering Rupert Murdoch’s birth and his connection to media, or Brecht’s fourth wall concept. Weighing possibilities: I’m mapping out an artist connected to the 1930s or an uncertainty principle, considering figures like “Heisenberg” or others associated with paradoxes. Piecing together musical influences: I’m gathering info on Philip Glass, whose minimalist compositions have a broad appeal yet maintain an elite essence. This aligns with the idea of a “Glass Ceiling” in art. |

Note how although the model has arrived at the correct answer fairly quickly, it has also generated a lot of interesting and creative possibilities, including:

This is exactly how a good player would try to approach this question, and this is exactly what all models so far, including GPT-4o, the direct predecessor of o1-preview, fail at. I asked GPT-4o the question above, regenerating the answer several times; while it was clear from its explanations that GPT-4o did understand the question, the answers I obtained were Alfred Hitchcock, Walt Disney, Andy Warhol, and Stanley Kubrick. GPT-4o definitely “knew” that Disney and Hitchcock had both been born much earlier than the 1930s but still gave these answers, with the most popular answer being Alfred Hitchcock (5 times out of 8); several times, GPT-4o explicitly wrote that Hitchcock was born in 1899 but still gave this answer.

So what’s so special about o1-preview? Let’s try to find out.

As usual with modern state of the art LLMs, there is little information explicitly given by OpenAI about the structure of its o1-preview model or its training regime. This is natural from the commercial point of view and probably a good thing from the security point of view (recall our post on the dangers of AI), but the net result is that we, like all outside experts, are mostly reduced to guesswork, with the only definitive sources being OpenAI’s announcement post and the OpenAI o1 System Card, which is safety-oriented and does not provide further information about the model itself.

The post vaguely gestures at being better at chain of thought reasoning. I hope to roll out a detailed post on chain of thought techniques in the near future, but, alas, so far it doesn’t look like the o1 family will meaningfully contribute to the scientific part of it. In the quote below, the only load-bearing words are “reinforcement learning”:

Through reinforcement learning, o1 learns to hone its chain of thought and refine the strategies it uses. It learns to recognize and correct its mistakes. It learns to break down tricky steps into simpler ones. It learns to try a different approach when the current one isn’t working. This process dramatically improves the model’s ability to reason.

I don’t have a personal opinion on what exactly this reinforcement learning method is. It could be RLHF applied to chains of internal reasoning. Or it could be something more involved. For example, Subbarao Kambhampati offers a very interesting speculation; he suggests that the o1 family learns to reason in a way similar to how AlphaZero learns to play board games, with text continuations playing the role of moves and “game results” being correct answers as evaluated by external LLMs. For a collection of this and other speculations, see an excellent (as always) survey post by Zvi Mowshowitz.

Whatever the details, the result is that o1-preview introduces a whole new paradigm to large language models. We have discussed many times (here and here, for instance) that LLMs are token prediction machines: they take in as much context as they can (again, see my previous post on context) and then produce the output token by token, autoregressively, never looking back.
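To make the “never looking back” part concrete, here is a minimal sketch of autoregressive greedy decoding. The bigram table `TOY_MODEL` and the function `generate_greedy` are invented for illustration; a real LLM conditions on the whole context and is vastly larger, but the loop has the same shape: pick a token, commit to it, move on.

```python
# Toy bigram "language model": maps the last token to next-token probabilities.
# The table is made up for illustration; a real LLM conditions on the whole context.
TOY_MODEL = {
    "<s>": {"the": 0.9, "a": 0.1},
    "the": {"cat": 0.6, "dog": 0.4},
    "a": {"cat": 0.5, "dog": 0.5},
    "cat": {"sat": 0.7, "</s>": 0.3},
    "dog": {"sat": 0.8, "</s>": 0.2},
    "sat": {"</s>": 1.0},
}


def generate_greedy(model, max_tokens=10):
    """Produce tokens one by one, always taking the most probable next token."""
    tokens = ["<s>"]
    for _ in range(max_tokens):
        dist = model[tokens[-1]]
        next_token = max(dist, key=dist.get)
        tokens.append(next_token)  # committed: earlier tokens are never revised
        if next_token == "</s>":
            break
    return tokens


print(generate_greedy(TOY_MODEL))  # ['<s>', 'the', 'cat', 'sat', '</s>']
```

Once a token is appended, nothing in the loop can revisit it; any “second thoughts” have to be written out as further tokens.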

The o1 family are still LLMs, but they produce many different outputs, check out different possibilities, combine the results, and generally “think through” the problem. This idea is not novel in itself: it is exactly the premise of chain of thought techniques. But OpenAI has managed to make it work on an unprecedented scale. Look at the plot with the scaling law they report; the Y-axis shows results on the American Invitational Mathematics Examination (AIME), a qualifying exam for the USA Mathematical Olympiad, and the X-axes show two different computational budgets:

The plot on the left is standard: more train-time computation leads to better performance with a log-linear dependency. But the plot on the right is completely novel: it shows that o1 models can actually make good use of test-time (inference) computational resources! Basically, it means that the longer you allow an o1 model to think about a problem, the better it does; I don’t think a scaling plot like that has ever been achieved before with LLMs.
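OpenAI has not disclosed how o1 spends its inference budget, so the following is not their method. It is only a toy illustration, under strong simplifying assumptions, of why extra test-time computation can buy accuracy at all: if a model answers a yes/no question correctly with probability `p` on each independent try, taking a majority vote over `n` tries (a self-consistency-style trick) is right more often than a single try. The function name `majority_accuracy` and the value `p = 0.6` are made up for the example.

```python
from math import comb


def majority_accuracy(p, n):
    """Probability that more than half of n independent samples are correct,
    when each sample is correct with probability p (binary answers, odd n)."""
    if n % 2 == 0:
        raise ValueError("use an odd number of samples to avoid ties")
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )


# More samples means more inference-time compute and higher accuracy.
for n in (1, 3, 5, 11, 51):
    print(n, round(majority_accuracy(0.6, n), 3))
```

With `p = 0.6`, one sample is right 60% of the time, three samples about 64.8%, and five about 68.3%. Real reasoning traces are neither independent nor binary, so this only conveys the direction of the effect, not the shape of the curve in the plot.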

Naturally, this leads to increased costs; as you probably have already noticed, o1-preview comes with some rather strict constraints on usage and steep prices for API access. But costs have a tendency to decrease over time due to algorithmic improvements and cheaper hardware, while peak performance, once achieved, stays with us forever.

And the performance jumps are very impressive. I mostly devote this post to WWW since this is my personal example where I can add something new to the discussion, but answering trick questions is definitely not the most productive use of o1-preview’s computational resources. Here is the performance comparison reported by OpenAI:

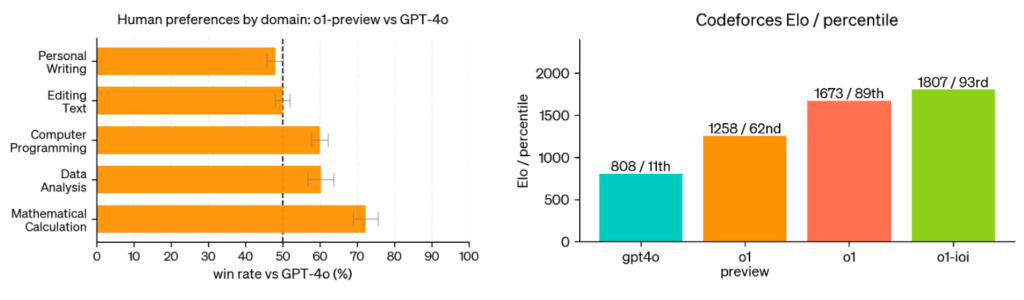

In all three cases, o1 blows GPT-4o out of the water. GPT-4o could solve some high-level mathematical Olympiad problems but o1 makes it to the Olympiad, scoring among the top 500 participants in the US this year. I’d love to see Claude Opus and Gemini 1.5 Pro on this plot since they are better at math than GPT-4o, but I don’t believe they would be as competitive. Coding has much improved, with o1 breezing through advanced competitive coding problems. Finally, the GPQA Diamond dataset (Rein et al., 2023) is not a high school science test; it contains questions that human Ph.D. students in the corresponding fields tend to answer with 65-75% accuracy when given full access to Google and over 30 minutes per question. On this test, o1 exceeded human expert level.

Here is a more detailed breakdown of various categories and benchmarks:

Note that additional reasoning power almost doesn’t help at all in tests on the English language, public relations, the basic SAT test, and English literature: the o1 model is not better at writing than GPT-4o. Indeed, if you ask humans which model they prefer, in terms of writing and editing they are completely equivalent:

But in anything that requires reasoning, formal logic, and especially long chains of derivations, o1 is just much, much better. Looking at the performance plots, it is hard to believe that o1 is not a new level of LLMs (that is, GPT-5) but just a novel way to fine-tune the same “4-level” LLMs that have been around for more than a year. Still, this is exactly what happened, and this same method of improvement would probably apply to a new generation of LLMs as well.

With this, let us go back to the game of questions.

People have been writing questions for the sports version of WWW since the 1980s. Starting from the 1990s, questions have been collected in a large database, published at “The WWW questions database”. The interface is a little dated, and there is also a more up-to-date database at “Got questions”. You can easily scrape both websites, and back when I was trying to apply NLP models at scale it was no problem to contact the maintainers and obtain a dump of the database directly.

But, of course, virtually all of the questions are in Russian. This is not a problem for o1-preview: it is perfectly capable of playing in the original Russian. But if you want to translate the questions and create a dataset that the English speaking world could understand, you run into a lot of trouble.

In this post, examples are in English because I have translated them. I did not try too hard; I just looked through my questions in the database and chose the ones that would be easy to translate. As I was filtering the questions, I was only choosing about one out of five for translation; if I really tried my best I would maybe end up with one out of three or so. The rest would be wordplay in Russian, references to Russian language culture little known among people who don’t speak the language, references to exact quotes in Russian, and so on. I obviously can’t show you the wordplay, but here are a couple of examples that can survive in translation but that I wouldn’t use for an English speaking audience.

Here are the answers:

More recent questions are on average easier to translate, as WWW is turning more and more towards Russian speaking people in other countries who do not have this exact cultural background characteristic of Soviet high schools. But there is still a lot of untranslatable wordplay and exact quotes.

Therefore, I believe it still requires human effort to choose translatable questions and then translate them, and I hope that an effort like that could be organized. This would be a great dataset for LLM testing, and also—who knows—it may get English speakers to become interested in the game! I hope to organize this effort at some point, and if you are interested in helping out please contact me directly.

Last week, I spent a whole day asking WWW questions to LLMs. I did it in part to submit some of the questions to the “Humanity’s Last Exam” dataset, an interesting initiative by the Center for AI Safety and Scale AI (the announcement was made by Dan Hendrycks and Alexandr Wang). The interface of the exam very conveniently poses the question to five top-of-the-line LLMs: GPT-4o, Claude 3.5 Sonnet, Google Gemini 1.5 Pro, o1-mini, and o1-preview.

Naturally, I won’t give out the examples where all LLMs were wrong because they became my submissions to the dataset. But I want to show several interesting cases where only o1-preview could get it right. I used only questions written by myself, so the style is a bit dated since my days as an active WWW question author were in 2005-2015, and I also made the style slightly more formal in translation so that the answer would be defined more precisely to allow for automated checking. Here is a table with the answers of all five models; I abridged the explanations but kept their main ideas and commented a little in square brackets:

| Question. A sports journalist wrote about a famous hockey player Vasily Trofimov: “He changed the tempo a lot, and it looked like he could speed up with no limit”. Next the journalist mentions a person who visited Russia in 1842 and 1843. Write the last name of this person. | |

| Dumas. …The key to identify is the hint given by specific years “1842 and 1843”. During that period, a renowned historical figure, the French writer Alexander Dumas, visited Russia. The reference in the journalist’s statement likely ties to Dumas because mentioning a historical visitation without a clear context suggests a notable person whose travels were significant in that era… [Dumas indeed visited Russia, in 1858-1859 in fact but that’s close enough to introduce some confusion.] | |

| Dumas. …This sudden change of topic suggests that we need to look for a connection between hockey and a 19th century visitor to Russia… a “Dumas drop pass” is a move where a player unexpectedly drops the puck for a teammate trailing behind… named after Alexander Dumas, likely due to its surprising and deceptive nature, which mirrors the unexpected plot twists in Dumas’ novels… [The “Dumas drop pass” is a pure (and quite beautiful) hallucination, I couldn’t google anything even close to “Dumas” in relation to drop passes.] | |

| Gogol. …a hockey player who could “speed up with no limit”… is likely a reference to the famous Russian author Nikolai Gogol and his short story “Nevsky Prospekt”… which features a character vanishing into thin air, much like he’s moving with infinite speed… in 1842 and 1843… Gogol was living [in Russia]… [Here Gemini is being too creative for its own good: Gogol did live in Russia but it would be incorrect to say that he “visited Russia”, this clearly suggests that the person was a foreigner] | |

| Stephenson. …Given the context of changing tempo and speed, it may metaphorically relate to advancements in transportation or engineering during that era. George Stephenson, known as the “Father of Railways,” was a prominent figure whose work significantly influenced railway development, which aligns with the themes of speed and tempo changes… [That’s quite creative but, of course, Stephenson is not a person who would embody high speed for the reader, he might be a reference for engineering ingenuity or something like that] | |

| Liszt. …Liszt was a renowned virtuoso pianist and composer known for his technical prowess and compositions that featured rapid tempo changes and extreme speed, such as his “Transcendental Études.” The word “transcendental” suggests going beyond limits, linking to the idea of speeding up with no limit. Additionally, Liszt visited Russia in 1842 and 1843, where he performed concerts in cities like St. Petersburg and Moscow… [This is completely correct; o1-preview even added an extra reference to “Transcendental Etudes” that I never intended but that does indeed fit the question quite well. I don’t think o1-preview simply googled Liszt’s concerts because if so, why didn’t GPT-4o and o1-mini do the same?] | |

| Question. In this question, X stands for a different word. A World War II poster said that X was easy to get. Another poster from the same era promised to win a victory over X. Answer in one word, what was the main weapon of this victory? | |

| cryptography… “X” refers to something negative or harmful that the government or military campaigns were trying to combat. In this context, “X was easy to get” likely refers to enemy information or secrets… the most renowned weapon for intelligence and communication security was the “Enigma” machine and the efforts surrounding “cryptography.” [The slogan “[Military] secrets are easy to get” would make no sense at all; GPT-4o misses the main point that the slogan should be some kind of joke or at least something memorable and, pardon the pun, catchy.] | |

| Silence …Given that X is described as “easy to get” and something that needed to be defeated, it’s highly probable that X stands for “information” or more specifically, “loose lips.” This refers to the famous WWII slogan “Loose lips sink ships”… The main weapon to combat this problem would have been silence or secrecy… [Same as above: “Loose lips sink ships” makes perfect sense as a war propaganda slogan; “loose lips are easy to get”, not so much.] | |

| Bonds. World War II propaganda often used fear tactics. An intangible enemy that is “easy to get” and needs to be defeated likely refers to a negative emotion or behavior. Considering the context, this was likely something that could hinder the war effort. [I think Gemini 1.5 simply didn’t come up with anything believable in this case, the reasoning is very unspecific and doesn’t make much sense. Exactly how I would describe my reasoning when we don’t get the question right but I still need to submit some kind of answer] | |

| Atomic During World War II, propaganda posters often emphasized the ease of achieving certain goals to boost morale and support for the war effort. The main weapon that was pivotal in ensuring victory was the atomic bomb, which promised a decisive end to the conflict. [Here o1-mini forgets all about the slogan, and even kind of misses the formal side of the question: “atomic” is not a valid answer to the question “what was the main weapon”, and “atomic bomb” would be two words.] | |

| Penicillin. During World War II, there were posters warning soldiers about the dangers of venereal diseases (VD), with slogans like “VD is easy to get” to highlight how easily one could contract such diseases… The main weapon in combating venereal diseases at that time was the antibiotic penicillin, which was mass-produced during the war and used effectively to treat infections like syphilis and gonorrhea among soldiers. [This is totally correct; o1-preview gets the joke of the slogan and reasons correctly that penicillin entered mass production during WWII so it could also be the subject of propaganda posters. Here, again, o1-preview probably did not “know” the exact poster because it didn’t specify the exact disease] | |

| Question. Consider the equality: 11+2=12+1. One of the ways to write this equality, discovered by Martin Gardner, appears in a list of the most beautiful… answer in one word, most beautiful what. | |

| equations Martin Gardner was renowned for his work in recreational mathematics and puzzles. The equality “11 + 2 = 12 + 1” seems mathematically incorrect at first glance, but this type of equality often involves a playful twist or insight that isn’t immediately obvious, typical of Gardner’s style. This format is frequently used in his mathematical puzzles or games, known for their elegance and creativity, which are often praised for their aesthetic appeal. [GPT-4o knows who Martin Gardner was but the reasoning doesn’t make sense: does this equality seem mathematically incorrect to you?..] | |

| equations …The equation 11 + 2 = 12 + 1 can be written in a visually appealing way that makes it appear as if the numbers themselves are balancing. This representation is often included in lists of the most beautiful mathematical equations or expressions… [I suppose any equation can be written in a way “as if the numbers themselves are balancing”. Claude Sonnet also doesn’t make the necessary jump outside mathematics.] | |

| equations Martin Gardner was a famous recreational mathematician known for his clever mathematical puzzles and games. The equality 11 + 2 = 12 + 1, while simple, could be written in a more visually appealing or conceptually interesting way if presented as a puzzle or rearranged in a pattern. This kind of playful approach to math is what Gardner was famous for. [Gemini again usefully gives an explanation that clearly suggests that it didn’t get the question right and it knows it: no specifics, just conjecture about a “visually appealing pattern”.] | |

| Anagrams The equation “11 + 2 = 12 + 1” is notable because the words “eleven plus two” are an anagram of “twelve plus one.” Martin Gardner highlighted this clever wordplay, recognizing it as a beautiful example of an anagram in the context of mathematical expressions. [In this case, both o1-mini and o1-preview got it right. Judging by the explanations, it could be direct knowledge, but then, again, why didn’t GPT-4o have this knowledge?..] | |

| Anagrams Martin Gardner discovered that “Eleven plus two” is an anagram of “Twelve plus one.” This clever rearrangement forms the equality 11 + 2 = 12 + 1 when written out in words, and the phrases are perfect anagrams of each other. This particular anagram is often cited in lists of the most beautiful anagrams due to its mathematical elegance and linguistic symmetry. | |
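The anagram in the o1 answers above is easy to verify mechanically. Here is a quick sketch (the helper names `letters` and `is_anagram` are mine) that compares letter counts while ignoring spaces and case:

```python
from collections import Counter


def letters(phrase):
    """Count the letters of a phrase, ignoring case, spaces, and punctuation."""
    return Counter(ch for ch in phrase.lower() if ch.isalpha())


def is_anagram(a, b):
    """Two phrases are anagrams if they use exactly the same letters."""
    return letters(a) == letters(b)


print(is_anagram("eleven plus two", "twelve plus one"))  # True
print(11 + 2 == 12 + 1)  # True
```

Both sides use the same 13 letters, so the equality holds both as arithmetic and as wordplay.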

| Question. Later the professor married his Ph.D. student, who turned out to be the only person who openly protested. Which university was she a student of? | |

| Not determinable from the given information The statement provides information about a professor who married his Ph.D. student, and it mentions that this student was the only person who openly protested. However, the statement does not provide explicit details or context regarding names, places, or events that would identify the university she attended. Without additional specific context or information that ties this scenario to a known public case or story, it is not possible to determine the name of the university. | |

| Insufficient information This question appears to be referring to a specific situation or story that is not provided in the context. Without more information about the professor, the student, or the event being described, it’s impossible to determine which university the student attended. The question assumes knowledge of a particular narrative or incident that hasn’t been shared. | |

| Unknown This question cannot be answered. There is no information provided about a professor marrying his Ph.D. student or any protests. | |

| I’m sorry, but I couldn’t find information regarding the specific university associated with the professor and his protesting Ph.D. student. If you can provide more details, I’d be happy to help further. Based on the information provided, there isn’t a well-documented or widely recognized case involving a professor marrying his Ph.D. student who was the only person to openly protest. Without additional context or specific details, it’s challenging to identify the exact university in question. | |

| Stanford University The professor referred to is Philip Zimbardo, who conducted the Stanford Prison Experiment in 1971 at Stanford University… Christina Maslach, a Ph.D. student at Stanford and Zimbardo’s girlfriend at the time, was the only person who openly protested the ethical implications of the experiment. She confronted Zimbardo… Later, Zimbardo and Maslach married. [This could be direct knowledge (although, again, why didn’t GPT-4o and o1-mini have it? knowledge is nothing if you can’t access it when needed), but this is such a great example — every single model explicitly gives up and calls the question impossible, but o1-preview reasons its way through!] | |
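Since the questions were phrased to allow automated checking, here is a minimal sketch of what such a check could look like for the four questions above. I do not know how the actual “Humanity’s Last Exam” checker works; the normalization rule, the accepted variants (“Anagram”, “Stanford”), and the mapping of table rows to models (assumed to follow the order in which the models were listed above) are all my assumptions.

```python
import string


def normalize(answer):
    """Lowercase, drop punctuation, and collapse whitespace."""
    table = str.maketrans("", "", string.punctuation)
    return " ".join(answer.lower().translate(table).split())


def is_correct(given, accepted):
    """An answer counts if it matches any accepted variant after normalization."""
    return normalize(given) in {normalize(a) for a in accepted}


# Accepted answers per question; the extra variants are assumptions.
ACCEPTED = [
    {"Liszt"},
    {"Penicillin"},
    {"Anagrams", "Anagram"},
    {"Stanford", "Stanford University"},
]

# Short answers from the tables above (row order assumed to match the model list).
ANSWERS = {
    "GPT-4o": ["Dumas", "cryptography", "equations", "Not determinable"],
    "Claude 3.5 Sonnet": ["Dumas", "Silence", "equations", "Insufficient information"],
    "Gemini 1.5 Pro": ["Gogol", "Bonds", "equations", "Unknown"],
    "o1-mini": ["Stephenson", "Atomic", "Anagrams", ""],  # no answer on the last one
    "o1-preview": ["Liszt", "Penicillin.", "Anagrams", "Stanford University"],
}

scores = {
    model: sum(is_correct(a, acc) for a, acc in zip(answers, ACCEPTED))
    for model, answers in ANSWERS.items()
}
for model, score in scores.items():
    print(f"{model}: {score}/{len(ACCEPTED)}")
```

On these four hand-picked questions the tally is 4 out of 4 for o1-preview, 1 for o1-mini, and 0 for the rest; this is a showcase of interesting cases, not a benchmark.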

As you can see, o1-preview is indeed a big step forward. This post has been devoted to the “What? Where? When?” game but more practical things like answering hard science questions, solving mathematical problems, and writing code are much improved too. What’s even more exciting is that with o1-preview, OpenAI is showing how to scale the models not only with respect to the size of the training data and computational power spent on training but also with respect to resources and time spent on inference. You could say that o1-preview has learned to actually think about a question rather than just generate the answer immediately.

This new scaling curve could be part of the “unhobbling” as discussed by Leopold Aschenbrenner in his recent Situational Awareness book (Aschenbrenner, 2024; highly recommended, by the way—it was not yet released by the time of my post on AI dangers but I would certainly discuss it in detail if it were), or it could be a new scaling law on top of that, speeding up AI capabilities development even further. Only time will tell, and it will be some of the most interesting and exciting times in the history of humanity.

I will leave you with a quote from Sam Altman’s blog post “The Intelligence Age”, published on September 23. Mr. Altman definitely knows how to ride hype waves but in this case, I tend to believe he is absolutely, frighteningly honest:

This may turn out to be the most consequential fact about all of history so far. It is possible that we will have superintelligence in a few thousand days (!); it may take longer, but I’m confident we’ll get there.

Sergey Nikolenko

Head of AI, Synthesis AI