AI Safety IV: Sparks of Misalignment

This is the fourth and final post in our series...

Facial identification and verification for consumer and security applications.

Activity recognition and threat detection across camera views.

Spatial computing, gesture recognition, and gaze estimation for headsets.

Millions of identities and clothing options to train best-in-class models.

Simulate driver and occupant behavior captured with multi-modal cameras.

Simulate edge cases and rare events to ensure the robust performance of autonomous vehicles.

Together, we’re building the future of computer vision & machine learning

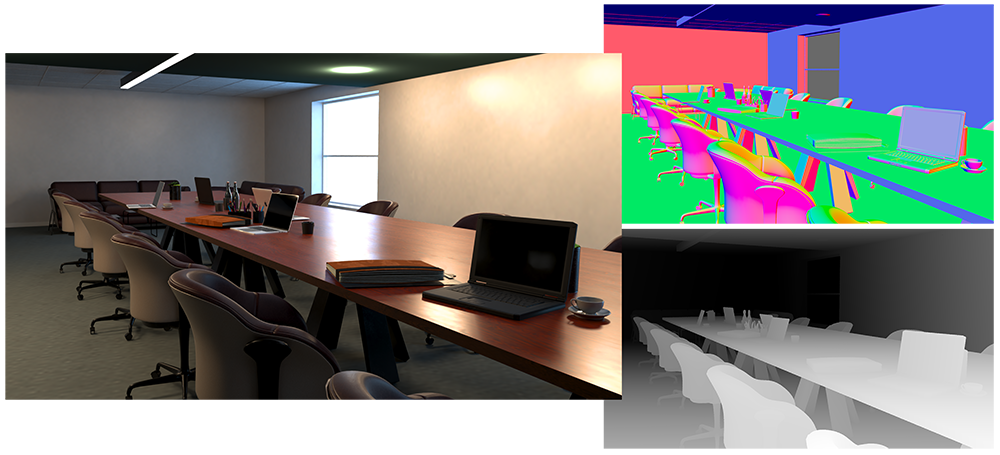

Synthetic data for computer vision to enable more capable and ethical AI.

Building AR/VR/XR applications requires knowledge of the surrounding 3D environment. Understanding the distance to objects, their material properties, and lighting is key to creating realistic virtual objects that interact accurately with the environment. For immersive applications, it’s important to understand what the user is looking at and experiencing. Obtaining accurately labeled 3D datasets for creating these core models has been expensive and difficult.

Synthetic data from Synthesis AI helps ML engineers create datasets for training models in applications across a wide range of industrial and consumer AR, VR and XR hardware. Synthesis Humans is ideal for models involving ML tasks such as eye tracking and gaze estimation for individual people, and Synthesis Scenarios can be used to generate labeled image and video datasets for complex scenes with multiple people and different environments.

Synthesis AI is the technology leader for creating realistic digital humans. Our digital human datasets cover the wide range of avatar characteristics, including skin tones, gestures, and facial expressions, needed for next-generation virtual experiences in the Metaverse.

We focus on the fine details of human movement and characteristics, as well as human interactions with a given environment, to support the development of augmented and virtual reality applications, avatars and experiences in the Metaverse.

Accurate eye tracking and gaze estimation are essential for creating immersive experiences in AR, VR and XR environments. We offer the ability to create diverse close-up images of the eye in RGB and NIR to enable the development of more accurate gaze estimation models. Programmatically control eye shape, pupil size, gaze direction, blink rate, and other variables, all with detailed sub-segmentation maps and 3D landmarks.
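As a rough illustration of how gaze-direction labels like these are commonly used, the sketch below converts yaw and pitch angles into a unit gaze vector and measures the angular error between a predicted and a ground-truth direction, a standard metric for gaze estimation models. The function names and the axis convention are assumptions made for this example; they do not describe the Synthesis AI label format or API.

```python
import math


def gaze_vector(yaw_deg, pitch_deg):
    """Convert yaw/pitch angles in degrees to a unit 3D gaze direction.

    Assumed convention (hypothetical, not taken from any dataset spec):
    +x points right, +y up, +z forward; yaw turns left/right, pitch up/down.
    """
    yaw = math.radians(yaw_deg)
    pitch = math.radians(pitch_deg)
    x = math.cos(pitch) * math.sin(yaw)
    y = math.sin(pitch)
    z = math.cos(pitch) * math.cos(yaw)
    return (x, y, z)


def angular_error_deg(predicted, target):
    """Return the angle in degrees between two 3D direction vectors."""
    dot = sum(p * t for p, t in zip(predicted, target))
    norm_p = math.sqrt(sum(p * p for p in predicted))
    norm_t = math.sqrt(sum(t * t for t in target))
    if norm_p == 0.0 or norm_t == 0.0:
        raise ValueError("gaze vectors must be non-zero")
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cos_angle = max(-1.0, min(1.0, dot / (norm_p * norm_t)))
    return math.degrees(math.acos(cos_angle))


if __name__ == "__main__":
    truth = gaze_vector(0.0, 0.0)        # looking straight ahead
    prediction = gaze_vector(10.0, 0.0)  # model output, 10 degrees off in yaw
    print(f"angular error: {angular_error_deg(prediction, truth):.2f} degrees")
```

Averaging this error over a held-out set gives a single number for comparing gaze models trained on different data mixes.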

Computer vision systems can detect human emotion through facial expressions, which are often culture- and context-dependent. Recognizing emotion is vital for headset manufacturers and developers building AR, VR, and XR applications.

Learn More About Our Synthetic Data Solutions And Schedule A Demo Today

Take a moment to submit a bit more information below about the problem you’re trying to solve with synthetic data. We’d love the opportunity to help you build the AR, VR and Metaverse applications of tomorrow.

Obtaining diverse and accurately labeled 3D hand data is difficult and time-consuming. Our system lets computer vision engineers create photorealistic image and video datasets, with the ability to modify hand geometry, skin tone, gestures, accessories, and other characteristics. The datasets produced contain detailed 3D landmarks, depth, and segmentation maps.

AR experiences depend on a deep understanding of the spatial environment. Synthetic data provides never-before-available annotations such as depth, 3D landmarks, material properties, surface normals, and lighting maps to help computer vision engineers build more accurate spatial and localization models.
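To show one way per-pixel depth annotations can feed a spatial model, here is a minimal sketch that back-projects a pixel with known depth into a 3D camera-space point using the standard pinhole camera model. The intrinsics (`fx`, `fy`, `cx`, `cy`) and the function name are illustrative assumptions, not values or calls from any Synthesis AI dataset.

```python
def backproject_pixel(u, v, depth, fx, fy, cx, cy):
    """Map pixel (u, v) with depth (distance along the optical axis)
    to a 3D point (X, Y, Z) in the camera frame, pinhole model.

    fx, fy: focal lengths in pixels; cx, cy: principal point in pixels.
    All intrinsics here are hypothetical example values.
    """
    if depth <= 0:
        raise ValueError("depth must be positive")
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


if __name__ == "__main__":
    # Hypothetical 640x480 camera with a 500-pixel focal length.
    fx = fy = 500.0
    cx, cy = 320.0, 240.0
    point = backproject_pixel(420.0, 240.0, 2.0, fx, fy, cx, cy)
    print(point)
```

Applying the same mapping to every pixel of a depth map yields a point cloud, which is a common starting point for localization and surface reconstruction.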