Facial identification and verification for consumer and security applications.

Activity recognition and threat detection across camera views.

Spatial computing, gesture recognition, and gaze estimation for headsets.

Millions of identities and clothing options to train best-in-class models.

Simulate driver and occupant behavior captured with multi-modal cameras.

Simulate edge cases and rare events to ensure the robust performance of autonomous vehicles.

Together, we’re building the future of computer vision & machine learning

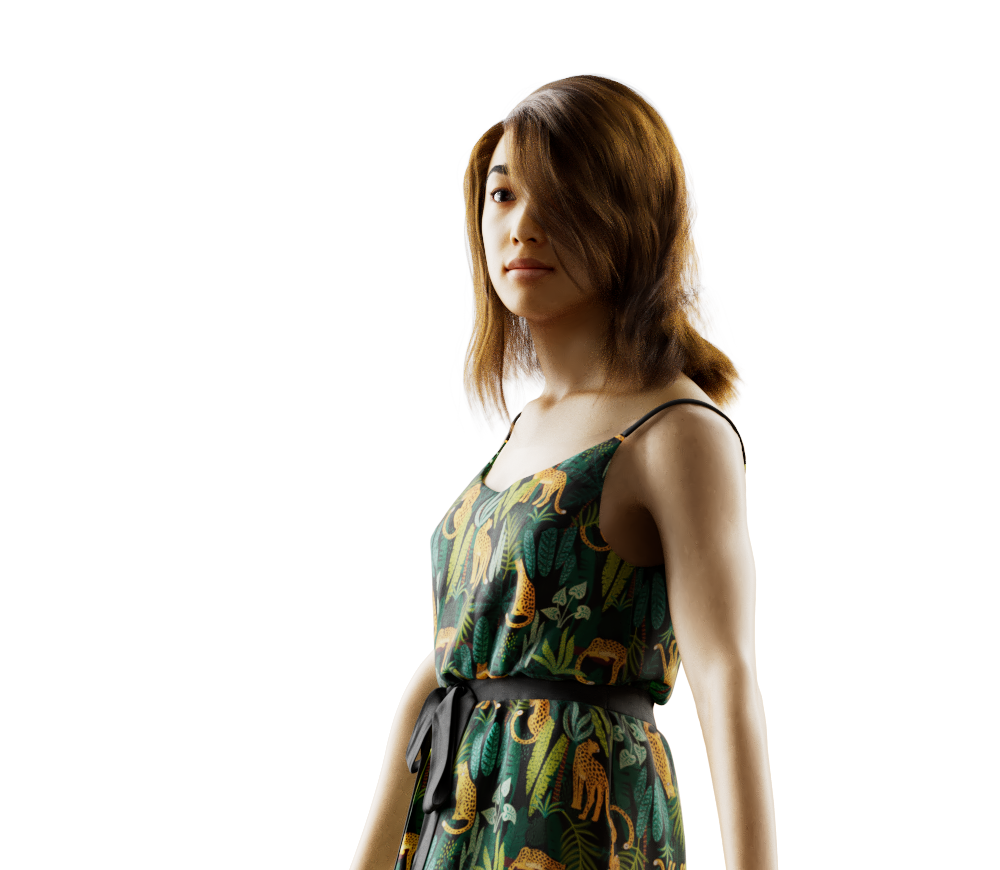


New augmented reality and virtual reality applications in the Metaverse depend on a detailed representation of how people interact in a wide variety of real-life scenarios. However, Metaverse growth is severely limited by the complexity of capturing and annotating real-world 3D human data. The sophisticated machine learning models behind the AR and VR apps of the future are driving the need for synthetic data.

Synthesis AI is capable of supplying a nearly infinite amount of synthetic data for training ML models in AR/VR software development. The synthetic datasets provide pixel-perfect 3D annotations to help build highly robust digital human models for Metaverse applications at a fraction of the cost and time required today.

Synthesis AI is the technology leader for creating realistic digital humans. Our digital human datasets cover the many avatar characteristics, including skin tones, gestures, and facial expressions, needed for next-generation virtual innovation in the Metaverse.

We focus on the fine details of human movement and characteristics, as well as human interactions with a given environment, to support the development of augmented and virtual reality applications, avatars and experiences in the Metaverse.

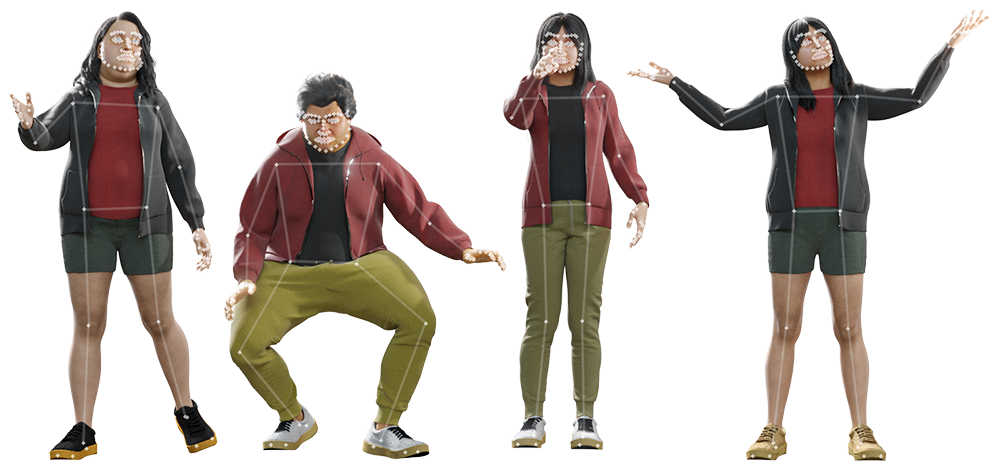

Programmatic control of body landmarks helps build highly efficient and robust pose estimation models. Our pose estimation models are key to recreating lifelike human movements for realistic virtual applications.

Learn More About Our Synthetic Data Solutions And Schedule A Demo Today

Take a moment to tell us a bit more below about the problem you’re trying to solve with synthetic data. We’d love the opportunity to help you build the AR, VR, and Metaverse applications of tomorrow.