AI Safety IV: Sparks of Misalignment

This is the last, fourth post in our series...

Facial identification and verification for consumer and security applications.

Activity recognition and threat detection across camera views.

Spatial computing, gesture recognition, and gaze estimation for headsets.

Millions of identities and clothing options to train best-in-class models.

Simulate driver and occupant behavior captured with multi-modal cameras.

Simulate edge cases and rare events to ensure the robust performance of autonomous vehicles.

Together, we’re building the future of computer vision & machine learning

Last time, we talked about robotic simulations in general: what they are and why they are inevitable for robotics based on machine learning. We even touched upon some of the more philosophical implications of simulations in robotics, discussing early concerns on whether simulations are indeed useful or may become a dead end for the field. Today, we will look at the next steps of robotic simulations and see how they progressed, using the example of MOBOT, a project developed in the first half of the 1990s at the University of Kaiserslautern. This is another relatively long read and the last post in the “History of Synthetic Data” series.

Let’s begin with a few words about the project itself. MOBOT (Mobile Robot, and that’s the most straightforward acronym you will see in this post) was a project about a robot navigating indoor environments. In what follows, all figures are taken from the papers about the MOBOT project, so let me just list all the references up front and be done with them: (Buchberger et al., 1994), (Trieb, von Puttkamer, 1994), (Edlinger, von Puttkamer, 1994), (Zimmer, von Puttkamer, 1994), (Jorg et al., 1993), (Hoppen et al., 1990).

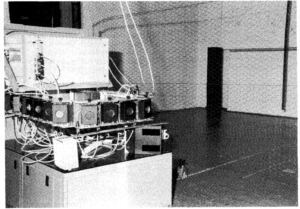

Here is what MOBOT-IV looked like:

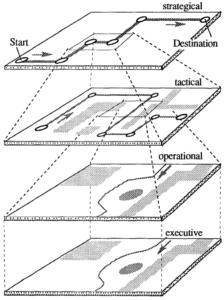

Note the black boxes that form a 360 degree belt around the robot: these are sonar sensors, and we will come back to them later. The main problem that MOBOT developers were solving was navigation in the environment, that is, constructing the map of the environment and understanding how to go where the robot needed to go. There was this nice hierarchy of abstraction layers that gradually grounded the decisions down to the most minute details:

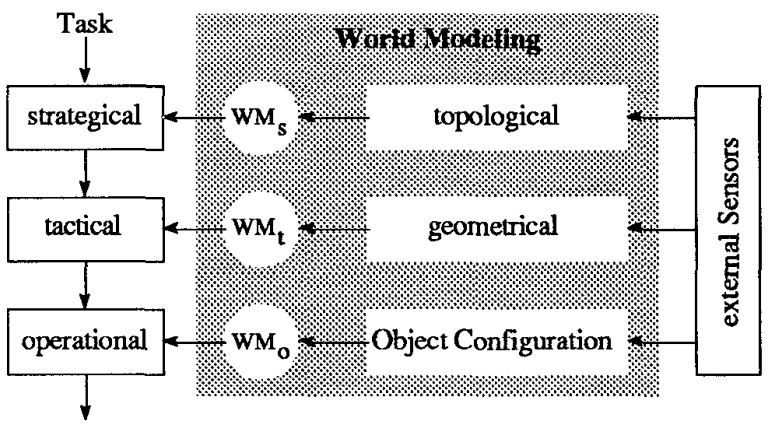

And there were three different layers of world modeling, too; the MOBOT viewed the world differently depending on the level of abstraction:

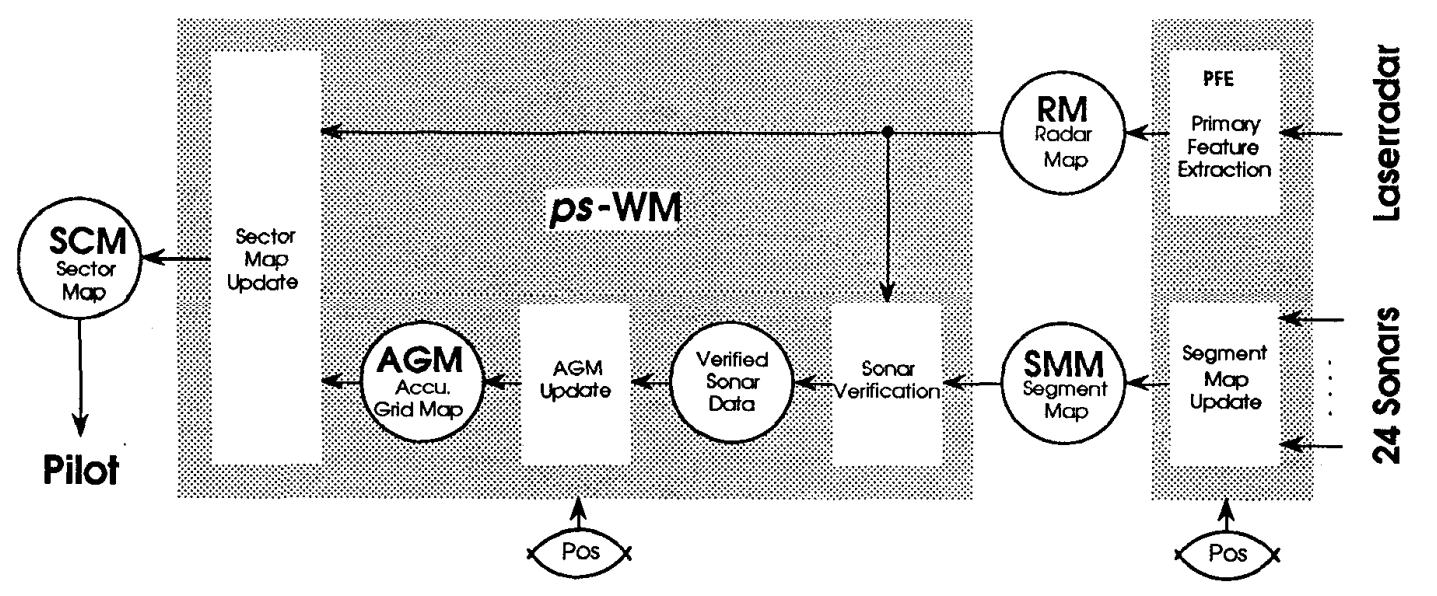

But in essence, this came down to the same old problem: figure out the sensor readings and map them to all these nice abstract layers where the robot could run pathfinding algorithms such as the evergreen A*. Apart from the sonars, the robot also had a laser radar, and the overall scheme of the ps-WM (Pilot Specific World Modeling; I told you the acronyms would only get weirder) project looks quite involved:

Note that there are several different kinds of maps that need updating. But as we are mostly interested in how synthetic environments were used in the MOBOT project, let us not dwell on the detail and proceed to the simulation.

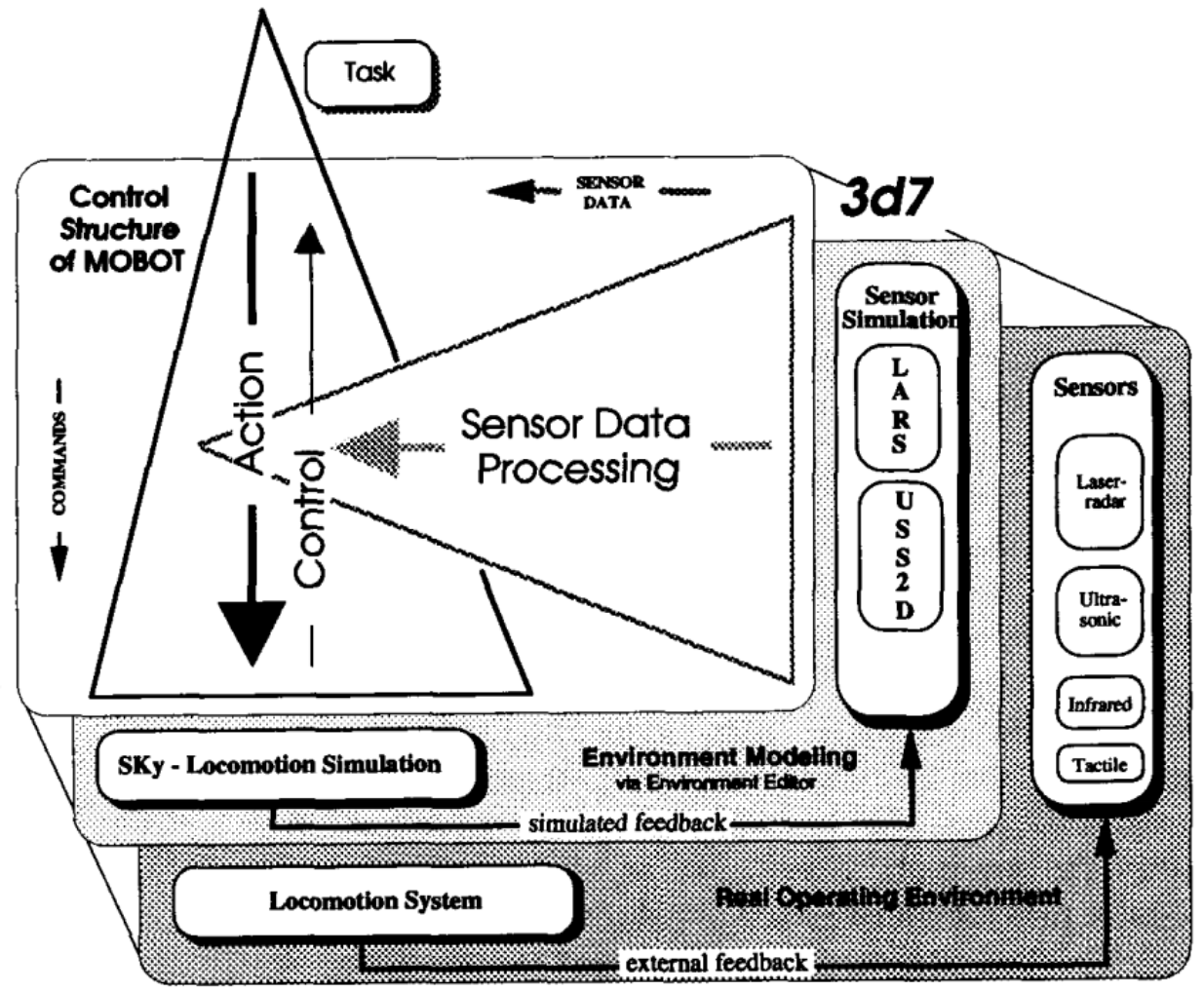

One of the earliest examples of a full-scale 3D simulation environment for robotics is the 3d7 Simulation Environment (Trieb, von Puttkamer, 1994); the obscure name does not refer to a nonexistent seven-sided die but rather represents an acronym for “3D Simulation Environment”. The 3d7 environment was developed for MOBOT-IV, an autonomous mobile robot that was supposed to navigate indoor environments; it had general-purpose ambitions rather than simply being, say, a robot vacuum cleaner, because its scene understanding was inherently three-dimensional, while for many specific tasks a 2D floor map would be just enough.

The overall structure of 3d7 is shown on the figure below:

It is pretty straightforward: the software simulates a 3D environment, robot sensors, and robot locomotion, which lets the developers model various situations, choose the best algorithms for sensory data processing and action control, and so on, just like we discussed last time.

The main point I wanted to make with this example is this: making realistic simulations is very hard. Usually, when we talk about synthetic data, we concentrate on computer vision and emphasize the work it takes to create a realistic 3D environment. It is indeed a lot, but just creating a realistic 3D scene is definitely not the end of the story for robotics.

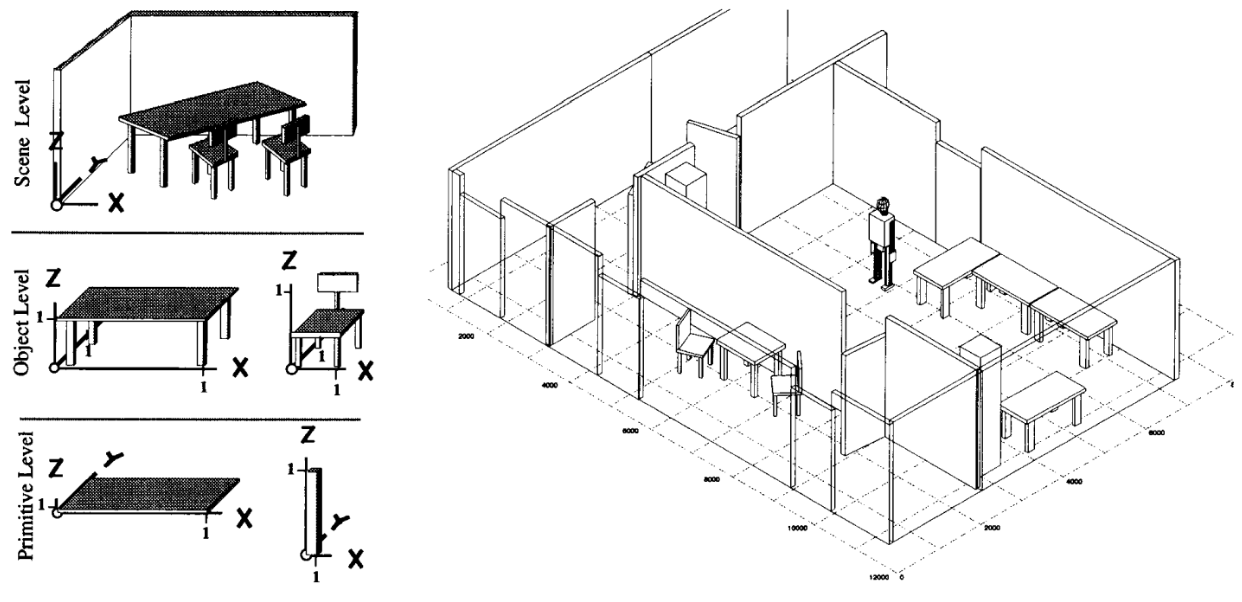

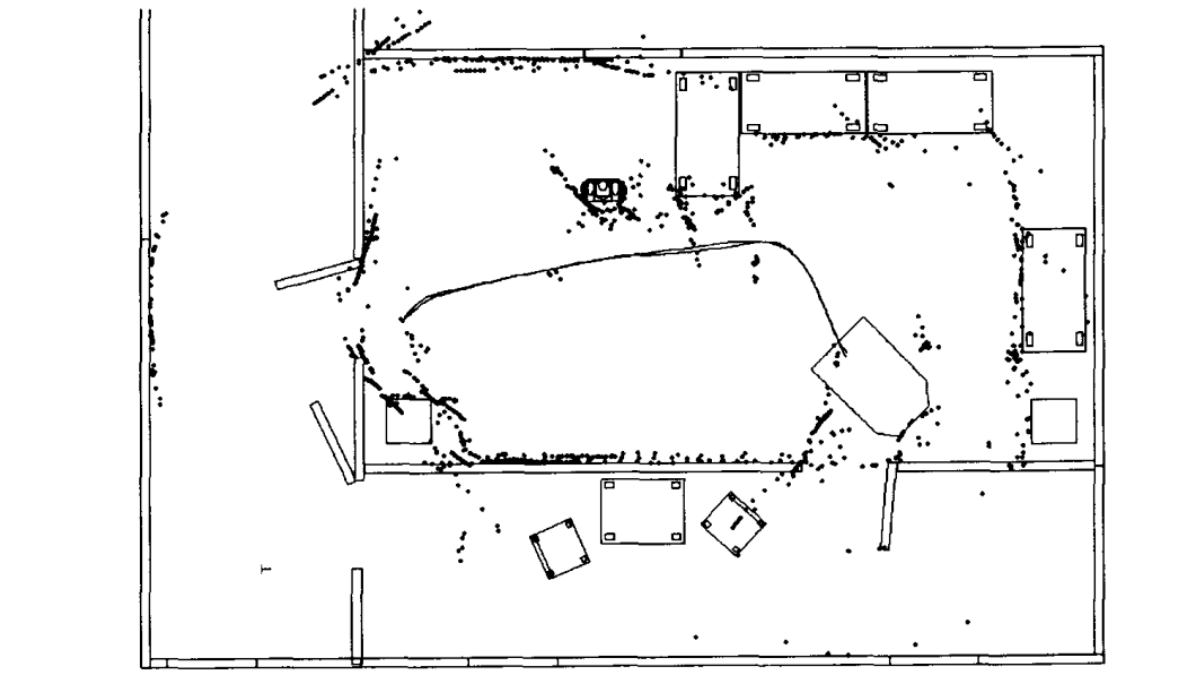

3d7 contained an environment editor that let you place primitive 3D objects such as cubes, spheres, or cylinders, and also more complex objects such as chairs or tables. It produced a scene complete with the labels of semantic objects and geometric primitives that make up these objects, like this:

But then the fun part began. MOBOT-IV contained two optical devices: a laser radar sensor and a brand new addition compared to MOBOT-III, an infrared range scanning device. This means that in order to make a useful simulation, the 3d7 environment had to simulate these two devices.

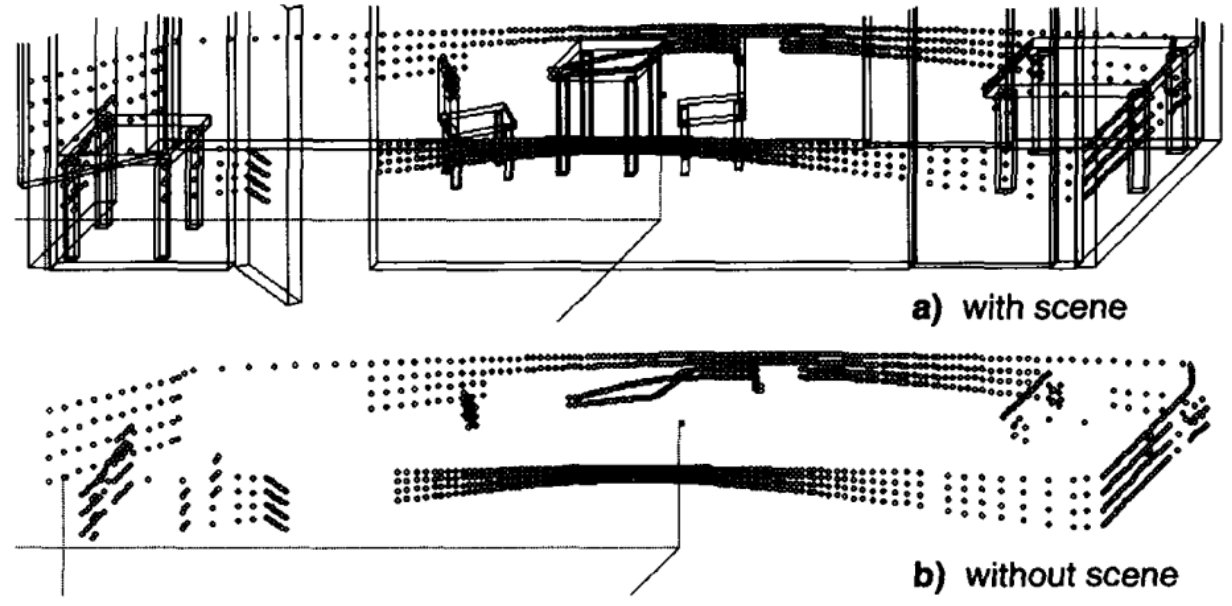

It turns out that both these simulations represent interesting projects. LARS, the Laser Radar Simulator, was designed to model the real laser radar sensor of MOBOT-III and the new infrared range scanner of MOBOT-IV. It produced something like this:

As for sonar range sensors, the corresponding USS2D simulator (Ultrasonic Sensor Simulation 2D) was even more interesting. It was based on the work (Kuc, Siegel, 1987) that takes about thirty pages of in-depth acoustic modeling. I will not go into the details but trust me, there are a lot of details there. The end result was a set of sonar range readings corresponding to the reflections from nearest walls:

This is a common theme in early research on synthetic data, one we have already seen in the context of ALVINN. While modern synthetic simulation environments strive for realism (we will see examples later in the blog), early simulations did not have to be as realistic as possible but rather had to emulate the imperfections of the sensors available at the time. Their developers could not simply assume that the hardware was pretty good: they knew it wasn’t and had to incorporate models of their hardware as well.
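To make the idea of emulating sensor imperfections a bit more concrete, here is a minimal sketch of a simulated range sensor belt in a rectangular room. It is not the USS2D or LARS model, which are far more detailed; the room shape, the Gaussian noise, the hard range limit, the dropout probability, and the number of sensors are all illustrative assumptions of mine.

```python
import math
import random

def ideal_range(x, y, heading, width, height):
    """Exact distance from (x, y) along `heading` to the walls of an
    axis-aligned rectangular room [0, width] x [0, height].
    Assumes the sensor is strictly inside the room."""
    dx, dy = math.cos(heading), math.sin(heading)
    hits = []
    if dx > 1e-12:
        hits.append((width - x) / dx)   # right wall
    elif dx < -1e-12:
        hits.append(-x / dx)            # left wall
    if dy > 1e-12:
        hits.append((height - y) / dy)  # top wall
    elif dy < -1e-12:
        hits.append(-y / dy)            # bottom wall
    return min(hits)

def simulated_sonar(x, y, heading, width, height, rng,
                    noise_std=0.02, max_range=5.0, dropout=0.05):
    """Hypothetical imperfect sensor: Gaussian range noise, a hard range
    limit, and occasional missed echoes reported as max_range."""
    if rng.random() < dropout:
        return max_range
    d = ideal_range(x, y, heading, width, height) + rng.gauss(0.0, noise_std)
    return min(max(d, 0.0), max_range)

def sonar_belt(x, y, width, height, rng, n_sensors=24, **kwargs):
    """Readings from a 360-degree belt of evenly spaced sensors
    (the sensor count is an assumption, not MOBOT's actual layout)."""
    return [
        simulated_sonar(x, y, 2 * math.pi * i / n_sensors,
                        width, height, rng, **kwargs)
        for i in range(n_sensors)
    ]
```

Calling `sonar_belt(2.0, 1.5, 4.0, 3.0, random.Random(0))` yields one vector of noisy readings for a robot standing in the middle of a 4 by 3 room. An algorithm tested only on `ideal_range` would never see a missed echo or a saturated reading, which is exactly why early simulators bothered to model their hardware.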

As another early example, I can refer to the paper (Raczkowsky, Mittenbuehler, 1989) that discussed camera simulations in robotics. It is mostly devoted to the construction of a 3D scene, and back in 1989, you had to do it all yourself, so the paper covers:

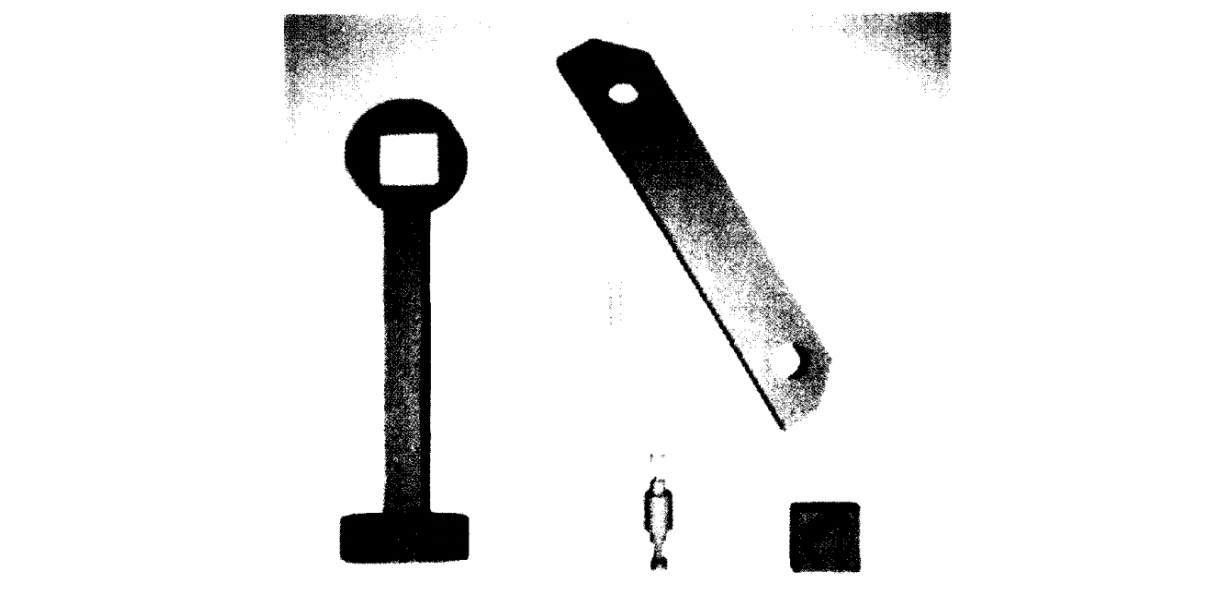

In the 1980s, only after working through all that could you produce such marvelous realistic images as this 200×200 synthetic photo of some workpieces:

Fortunately, by now most of this is already being taken care of by modern 3D modeling software or gaming engines! However, camera models are still relevant in modern synthetic data applications. For instance, an important use case for, e.g., a smartphone manufacturer might be to re-train or transfer its computer vision models when the camera changes, and you need a good model for both the old and new cameras in order to capture this change and perform this transition.

But wait, that’s not all! After all of this is done, you have only simulated the sensor readings! To actually test your algorithm, you also need to model the actions your robot can take and how the environment will respond to these actions. In the case of 3d7, this means a separate locomotion simulation model for robot movement called SKy (Simulation of Kinematics and Dynamics of wheeled mobile robots), which also merited its own paper but which we definitely will not go into. We will probably return to this topic in the context of more modern robotic simulations: this is a part of the simulation that still has to be built separately and cannot be lifted from gaming engines.
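For a feeling of what the simplest possible locomotion model looks like, here is a minimal kinematic sketch. It is not SKy, which also models dynamics; I am assuming a differential-drive robot (two independently driven wheels), and the wheel base and time step are made-up values.

```python
import math

def step(pose, v_left, v_right, wheel_base, dt):
    """Advance a differential-drive pose (x, y, theta) by one time step,
    using simple Euler integration of the kinematic equations."""
    x, y, theta = pose
    v = 0.5 * (v_left + v_right)             # forward speed of the robot center
    omega = (v_right - v_left) / wheel_base  # turn rate in radians per second
    x += v * math.cos(theta) * dt
    y += v * math.sin(theta) * dt
    theta = (theta + omega * dt) % (2 * math.pi)
    return (x, y, theta)

def drive(pose, commands, wheel_base=0.5, dt=0.1):
    """Apply a sequence of (v_left, v_right) wheel speed commands."""
    for v_left, v_right in commands:
        pose = step(pose, v_left, v_right, wheel_base, dt)
    return pose
```

With equal wheel speeds the robot goes straight; with opposite speeds it spins in place. Feeding `drive` slightly mismatched speeds, as a real robot with imperfect wheels would produce, makes the pose drift away from the commanded straight line, which hints at why a purely kinematic estimate of position cannot be trusted for long.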

The MOBOT project did not contain many machine learning models; it was mostly operated by fixed algorithms designed to work with sensor readings as shown above. Even the 3d7 simulation environment was mostly designed to help test various data processing algorithms (world modeling) and control algorithms (e.g., path planning or collision avoidance), a synthetic data application similar to the early computer vision we talked about before.

But at some point, MOBOT designers did try out some machine learning. The work (Zimmer, von Puttkamer, 1994) has the appetizing title Realtime-learning on an Autonomous Mobile Robot with Neural Networks. These are not, however, the neural networks that you are probably used to: in fact, Zimmer and von Puttkamer used self-organizing maps (SOM), sometimes called Kohonen maps in honor of their creator (Kohonen, 1982), to cluster sensor readings.

The problem setting is this: as the robot moves around its surroundings, it collects sensor information. The basic problem is to build a topological map of the floor with all the obstacles. To do that, the robot needs to be able to recognize places where it has already been, i.e., to cluster the entire set of sensor readings into “places” that can serve as nodes for the topological representation.

Due to the imprecise nature of robotic movements we cannot rely on the kinematic model of where we tried to go: small errors tend to accumulate. Instead, the authors propose to cluster the sensor readings: if the current vector of readings is similar to what we have already seen before, we are probably in approximately the same place.
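Here is a minimal sketch of how a self-organizing map can cluster sensor vectors into “places”. It is a textbook one-dimensional SOM, not the network from the paper; the number of nodes, the decay schedules, and all parameter values are my own assumptions.

```python
import math
import random

def best_matching_unit(weights, x):
    """Index of the node whose weight vector is closest to reading x."""
    return min(
        range(len(weights)),
        key=lambda i: sum((w - v) ** 2 for w, v in zip(weights[i], x)),
    )

def train_som(readings, n_nodes=4, epochs=50, lr0=0.5, radius0=1.0, seed=0):
    """Train a 1D self-organizing map (a chain of nodes) on sensor vectors."""
    rng = random.Random(seed)
    # Initialize every node with a copy of a randomly chosen reading.
    weights = [list(rng.choice(readings)) for _ in range(n_nodes)]
    for epoch in range(epochs):
        frac = epoch / epochs
        lr = lr0 * (1.0 - frac)                    # learning rate decays linearly
        radius = max(radius0 * (1.0 - frac), 0.1)  # neighborhood shrinks over time
        order = readings[:]
        rng.shuffle(order)
        for x in order:
            bmu = best_matching_unit(weights, x)
            for i, w in enumerate(weights):
                # Gaussian neighborhood over node indices on the chain:
                # the winner moves most, its neighbors move less.
                h = math.exp(-((i - bmu) ** 2) / (2 * radius ** 2))
                for k in range(len(w)):
                    w[k] += lr * h * (x[k] - w[k])
    return weights
```

After training, `best_matching_unit(weights, reading)` acts as a place label: two readings that map to the same node are treated as coming from approximately the same place, and transitions between labels along a trajectory suggest edges of a topological map.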

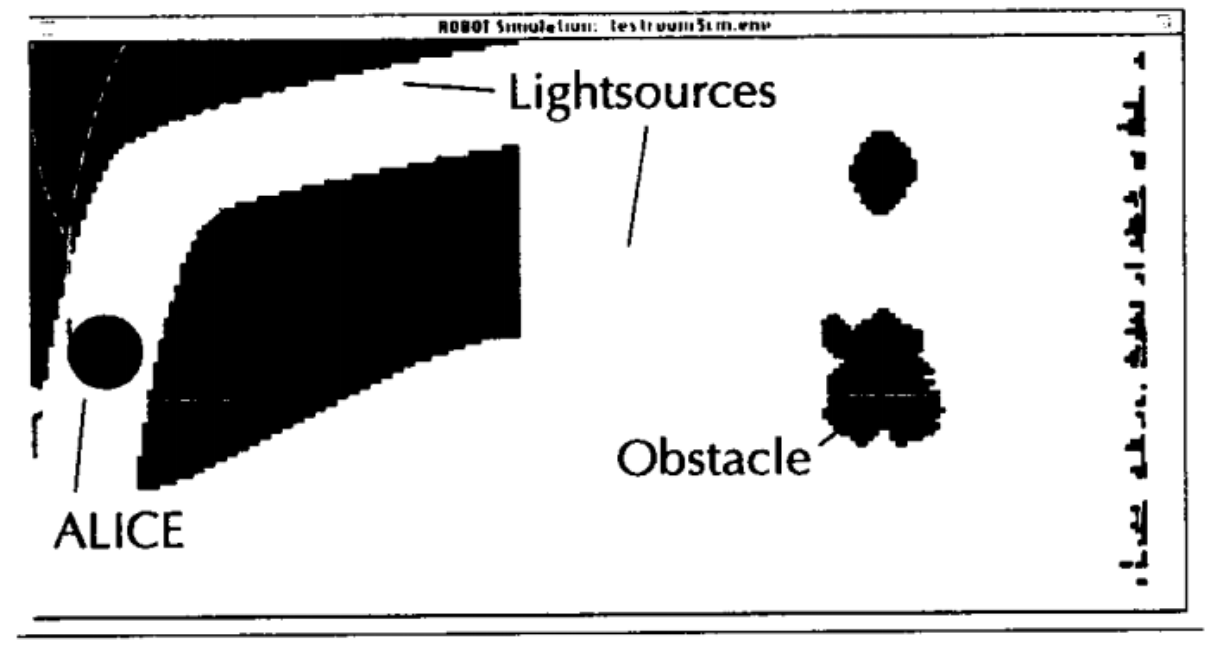

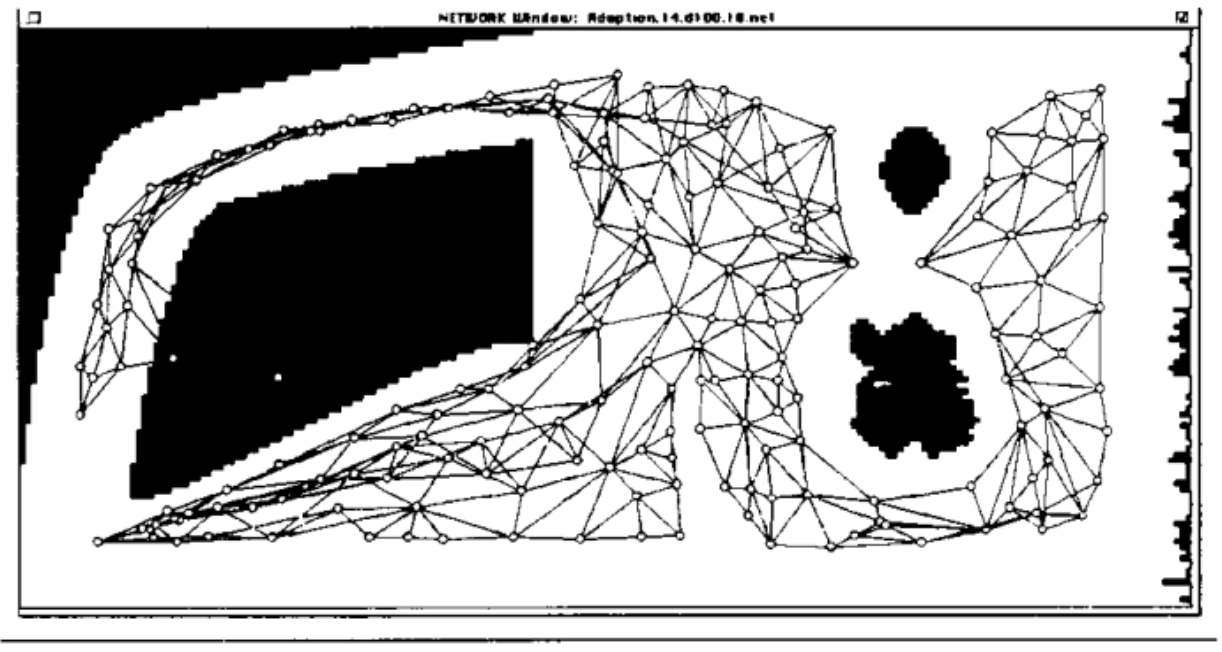

And again we see the exact same effect: while Zimmer and von Puttkamer do present experiments with a real robot, most of the experiments for SOM training were done with synthetic data. They were run in a test environment that looks like this:

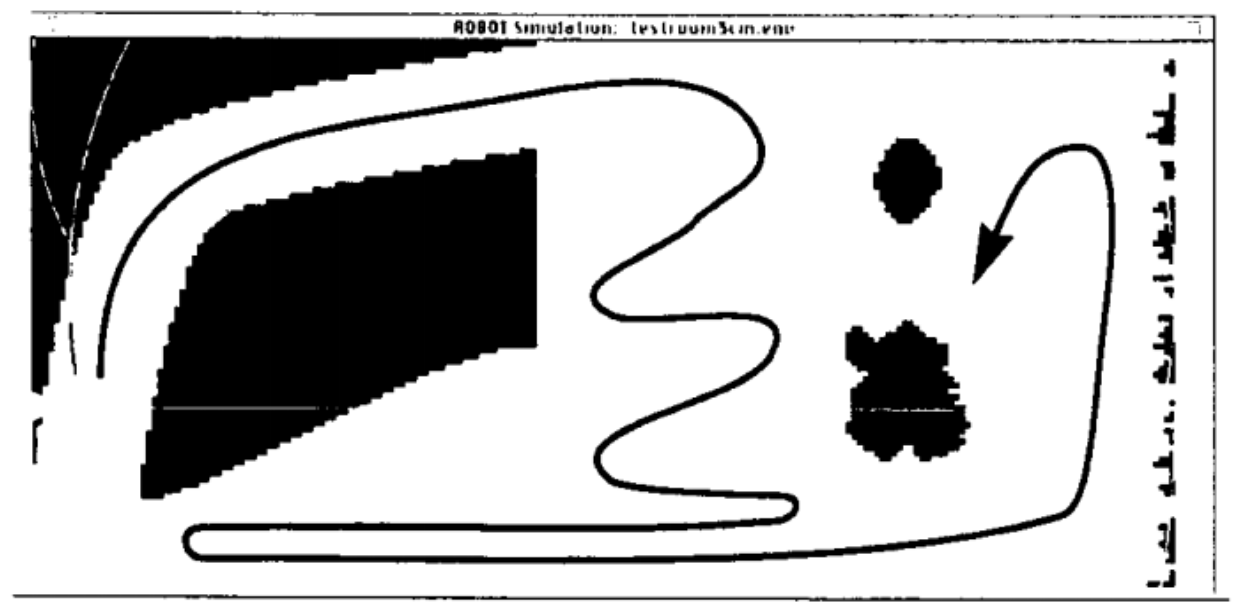

with a test trajectory of the form

And indeed, when the virtual robot had covered this trajectory, the SOMs clustered nicely and made it possible to build a graph, a topological representation of the territory:

Today, we have seen the main components of a robotic simulation system; we have discussed the many different aspects that need to be simulated and shown how this all came together in one early robotic project, the MOBOT from the University of Kaiserslautern.

This post concludes the series devoted to the earliest applications of synthetic data. We talked about line drawings and test sets in early computer vision, the first self-driving cars, and spent two posts talking about simulations in robotics. All through the last posts, we have seen a common theme: synthetic data may not be necessary for classical computer vision algorithms. But as soon as any kind of learning appears in computer vision, synthetic data is not far behind. Even in the early days, it was used to test CV algorithms, to train models for something as hard as learning to drive or simply for clustering sensor readings to get a feeling for where you have been.

I hope I have convinced you that synthetic data has gone hand in hand with machine learning for a long time, especially with neural networks. In the next posts, I will jump back to something on the bleeding edge of synthetic data research. Stay tuned!

Sergey Nikolenko

Head of AI, Synthesis AI